Why 95% of AI projects fail (and what the successful 5% do differently)

The numbers don't lie: according to 2025 research from MIT, only 5% of AI pilots reach production with measurable impact. That is not because the technology doesn't work. It is because organizations keep making the same mistake.

And that mistake is not what you think.

The problem no one mentions: AI doesn't learn by itself

New employees get better over a few months. They pick up signals, adapt, learn from feedback.

AI does not.

An AI assistant performs exactly the same on day 180 as on day 1, unless someone actively maintains a feedback loop. MIT calls this the learning gap: the difference between how people learn and how AI tools stagnate without guidance.

At an insurance company, we solved this by mapping out the process with the people doing the work. What is the input? What is the expected output? Then we iteratively adjusted the prompts, with those people in the room.

The result: an AI assistant that meets their standards, because they were the ones who defined that standard.

At a healthcare institution, we skipped that step. People started with expectations that were too high, the quality disappointed them, and they disengaged.

Same technology. Completely different result.

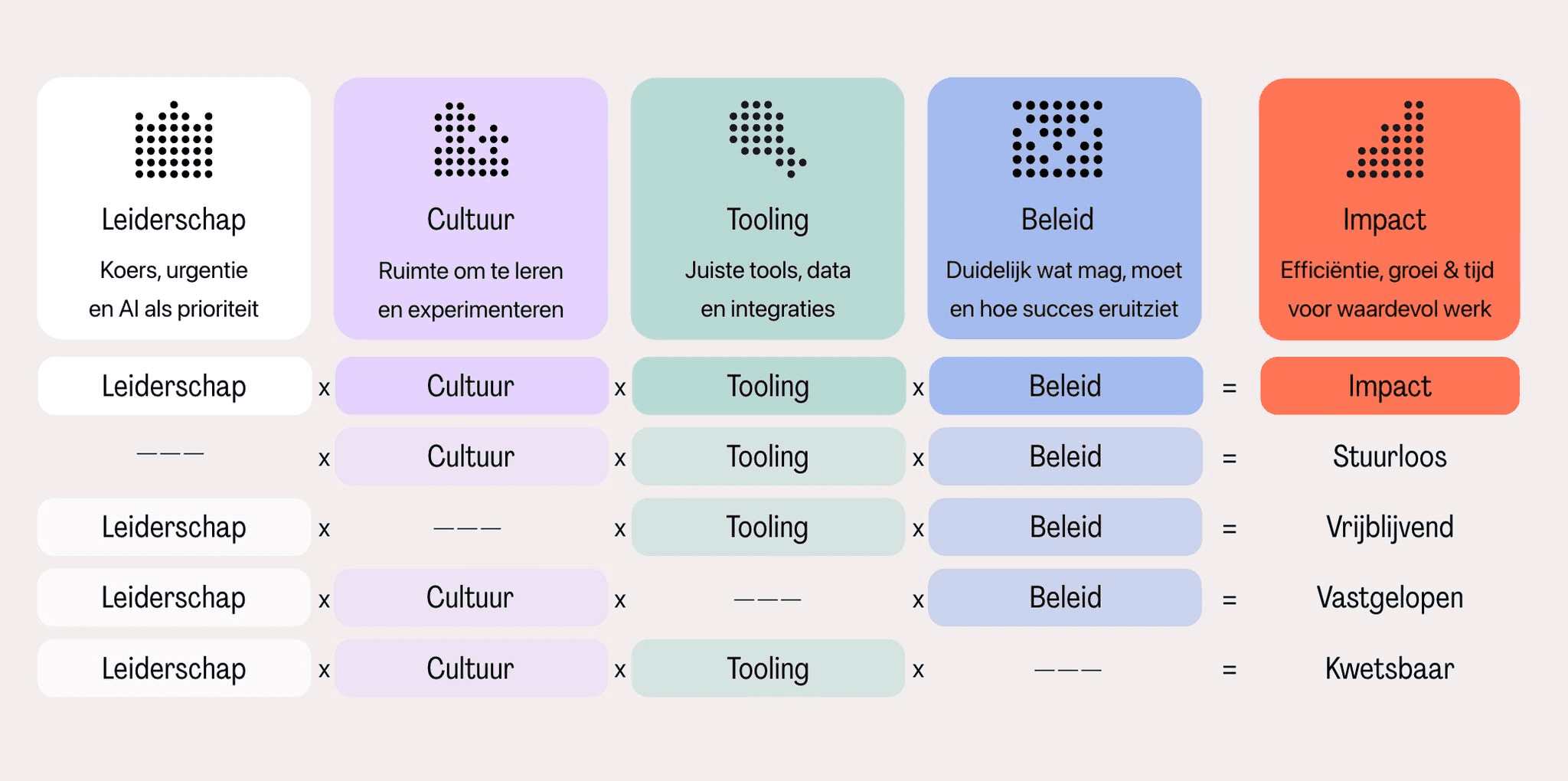

Five factors that determine whether AI implementation succeeds

Successful AI adoption requires five elements. Not three out of five—all five.

1. Leadership

Not just allocating budget, but being actively involved. Asking questions. Monitoring progress. Removing obstacles.

2. Culture

Space to experiment. Being allowed to make mistakes without being penalized. "I tried something with AI and it didn't work" needs to be an acceptable sentence.

3. Tooling

The right technical foundation. Access to data. Systems that communicate with each other. This is where most organizations start—but it's just one of five.

4. Policy

This is usually where things go wrong. What does it concretely mean if someone "works well with AI"? How do you measure that? How do you reward it? Without answers to these questions, AI remains a hobby rather than a working method.

5. Impact

Measurable results that tell the story. Not "we save time" but "this process used to take 4 hours and now takes 45 minutes." Numbers you can share.

Missing one factor? Then you get pilots that remain pilots forever.

Where it really gets stuck

You might think: technology. Or budget. Or employee resistance. But what we see most often is different.

Leadership makes it a priority. The culture is ready. The tools work. People are enthusiastic. And then it stalls at policy.

Because no one has defined what "good AI use" means. There is no way to measure it. No way to reward it. And so nothing structurally changes—even though everyone has the best intentions.

What the 5% does differently

The organizations that do succeed share three things:

They assign ownership

Not "everyone participates," but a concrete team: one expert who knows the content, one ambassador who builds buy-in, and one engineer who handles the technical side.

And crucially: someone who literally "manages" the AI agents. Someone who gathers feedback, improves prompts, and makes sure the output keeps matching the team's standard.
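To make that feedback loop more concrete, here is a minimal sketch in Python. It is an illustration, not the tooling used in the projects described above. It assumes the team has written down example inputs together with phrases a good answer must contain; `Example`, `score_prompt`, and `fake_assistant` are hypothetical names, and `fake_assistant` stands in for whatever AI assistant you actually call. Rerunning the same examples after every prompt change shows whether the output still meets the team's standard.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Example:
    """One case written down by the people who do the work (hypothetical format)."""
    input_text: str
    must_contain: list[str]  # phrases the team expects in a good answer


def score_prompt(generate: Callable[[str], str], examples: list[Example]) -> float:
    """Return the fraction of examples whose output contains every expected phrase."""
    if not examples:
        return 0.0
    passed = 0
    for ex in examples:
        output = generate(ex.input_text).lower()
        if all(phrase.lower() in output for phrase in ex.must_contain):
            passed += 1
    return passed / len(examples)


def fake_assistant(text: str) -> str:
    # Hypothetical stand-in for a real AI assistant call.
    mentioned = "yes" if "policy" in text.lower() else "no"
    return f"Summary: claim received. Policy number mentioned: {mentioned}"


examples = [
    Example("Customer email about policy 123, water damage", ["claim", "policy number mentioned: yes"]),
    Example("Question about an invoice", ["claim", "policy number mentioned: yes"]),
]

print(f"Pass rate: {score_prompt(fake_assistant, examples):.0%}")  # Pass rate: 50%
```

Checking for required phrases is a deliberately crude criterion. In practice the team decides what counts as a good answer, and that definition is what the check should encode.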

They start with processes, not tools

First determine: which processes are standardized enough to automate? Where is the variation that requires human judgment?

AI thrives on consistency. If ten people do the same work in ten different ways, AI cannot learn what "good" is.

They treat AI like a new colleague

One you need to train. One that needs feedback. One that only really gets up to speed after weeks, and only if someone provides that guidance.

It sounds soft, but it's the core: AI implementation is more like training people than installing software.

The lesson

AI projects don't fail because of bad technology. They fail for the same reasons reorganizations fail: no ownership, no feedback loop, no clear definition of success.

The 95% treats AI as a tool you install.

The 5% treats AI as a colleague you mentor.

Frequently asked questions

What percentage of AI projects fail?

According to MIT research from 2025, 95% of AI pilots never reach production with measurable ROI. Only 5% reach the stage where AI actually contributes to business results.

What is the learning gap in AI?

The learning gap describes the difference between human learning ability and AI stagnation. People improve naturally through experience; AI tools continue to perform exactly the same unless someone actively collects feedback and makes improvements.

What is the main reason AI implementation fails?

Not technology or budget, but missing policy. Organizations do not define what good AI use means, how to measure it, or how to reward it. As a result, adoption stays limited to a few enthusiasts instead of becoming a company-wide working method.

Sources: MIT NANDA, "The GenAI Divide" (2025); Fortune, "Why 95% of AI pilots fail"