From AI pilot to production: why scaling is almost always a human challenge

The pilot works. The results are good. The team is enthusiastic.

And then it stalls there.

Six months later, the same pilot is still running with the same five people. Scaling? It's always coming. But it never happens.

The problem is rarely technical. It's almost always human.

The human aspect is forgotten

Pilots start with a few enthusiasts. People who are curious, who want to experiment, who are eager to try out the tool.

But everything changes when scaling up.

Suddenly, dozens of people have to input data into an AI system. Check outputs. Adapt their working methods. And that is underestimated.

"Human in the loop" sounds simple. But at scale, it means: many people all having to maintain the same quality standard. The same way of checking. The same willingness to provide feedback.

That only succeeds if you involve those people from the beginning.

A pilot that stalled

One of our first projects stalled. The technology worked. The results were good. But the users didn't use the tool.

Why not?

They didn't agree with the output. Not because it was objectively bad—but because they weren't involved in determining the standard. Everyone had their own idea of what "good output" was. And so everyone made their own version.

It wasn't a technical blocker. It was a human one.

The enthusiasts had set the standard. The rest felt bypassed. And a tool that people don't want to use is no tool.

A pilot that did scale

With a large insurer, we did it differently.

We also started with enthusiasts. But we had each step validated by the larger group. Not afterward—during the process.

"This will be the new standard for this output. What do you think? What are we missing? Where are the exceptions?"

When we were ready to scale, everyone was already informed. More than that: everyone had helped build the standard. The benefits of the tool had been translated into benefits for them. And people were already convinced of the added value, including the awareness that it's not perfect, that experimentation remains necessary, and that their feedback makes it better.

Result: broad adoption from day one.

The 80/20 of edge cases

Technically, you can quickly automate 80% of cases. The standard documents, the normal requests, the predictable input.

The challenge lies in the other 20%. Or actually: the 10% that is truly difficult.

Strange PDFs. Invoices with unusual layouts. Requests that are just a bit different. A human can handle these just fine—seeing immediately what it means. But AI struggles with them.

And here's the pitfall: pilots are often carried out with the "nice" documents. The examples that work well. That 80%.

Only when scaling do you encounter the edge cases. And if you're not prepared for those, quality and trust diminish.

So: test with the difficult cases during the pilot. Not only the easy ones.
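To make this concrete, here is a minimal sketch of how you could report pilot accuracy per segment instead of as one overall number. The segment names, the counts, and the `accuracy_by_segment` helper are all hypothetical, invented for illustration. They are not results from a real pilot.

```python
from collections import defaultdict


def accuracy_by_segment(results):
    """Group (segment, is_correct) pairs and return
    {segment: (correct, total, accuracy)}."""
    counts = defaultdict(lambda: [0, 0])  # segment -> [correct, total]
    for segment, is_correct in results:
        counts[segment][1] += 1
        if is_correct:
            counts[segment][0] += 1
    return {seg: (c, t, c / t) for seg, (c, t) in counts.items()}


# Hypothetical pilot data: 80 standard documents, 20 edge cases.
pilot_results = (
    [("standard", True)] * 78 + [("standard", False)] * 2
    + [("edge_case", True)] * 11 + [("edge_case", False)] * 9
)

report = accuracy_by_segment(pilot_results)
total_correct = sum(correct for correct, _, _ in report.values())
total_cases = sum(total for _, total, _ in report.values())
overall = total_correct / total_cases

print(f"overall: {overall:.0%}")
for segment, (correct, total, accuracy) in report.items():
    print(f"{segment}: {correct}/{total} = {accuracy:.0%}")
```

In this made-up example the overall score looks fine, while the edge cases are right barely half the time. That gap is exactly what a pilot run on "nice" documents hides.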

Who owns scaling?

You need two people. Not two departments—two people with clear roles.

The enthusiast

Someone who engages with the people. Who builds support, answers questions, alleviates concerns. Who ensures that the larger group remains involved.

The expert

Someone recognized as a content expert within the department. Who defines what quality is. Who can judge if the output is good enough.

This combination of people skills and content expertise is crucial. An enthusiast without content authority gets no support. An expert without people skills gets no adoption.

Together they are the point of contact. The "manager" of the AI implementation.

The autonomy slider: from 0% to 97%

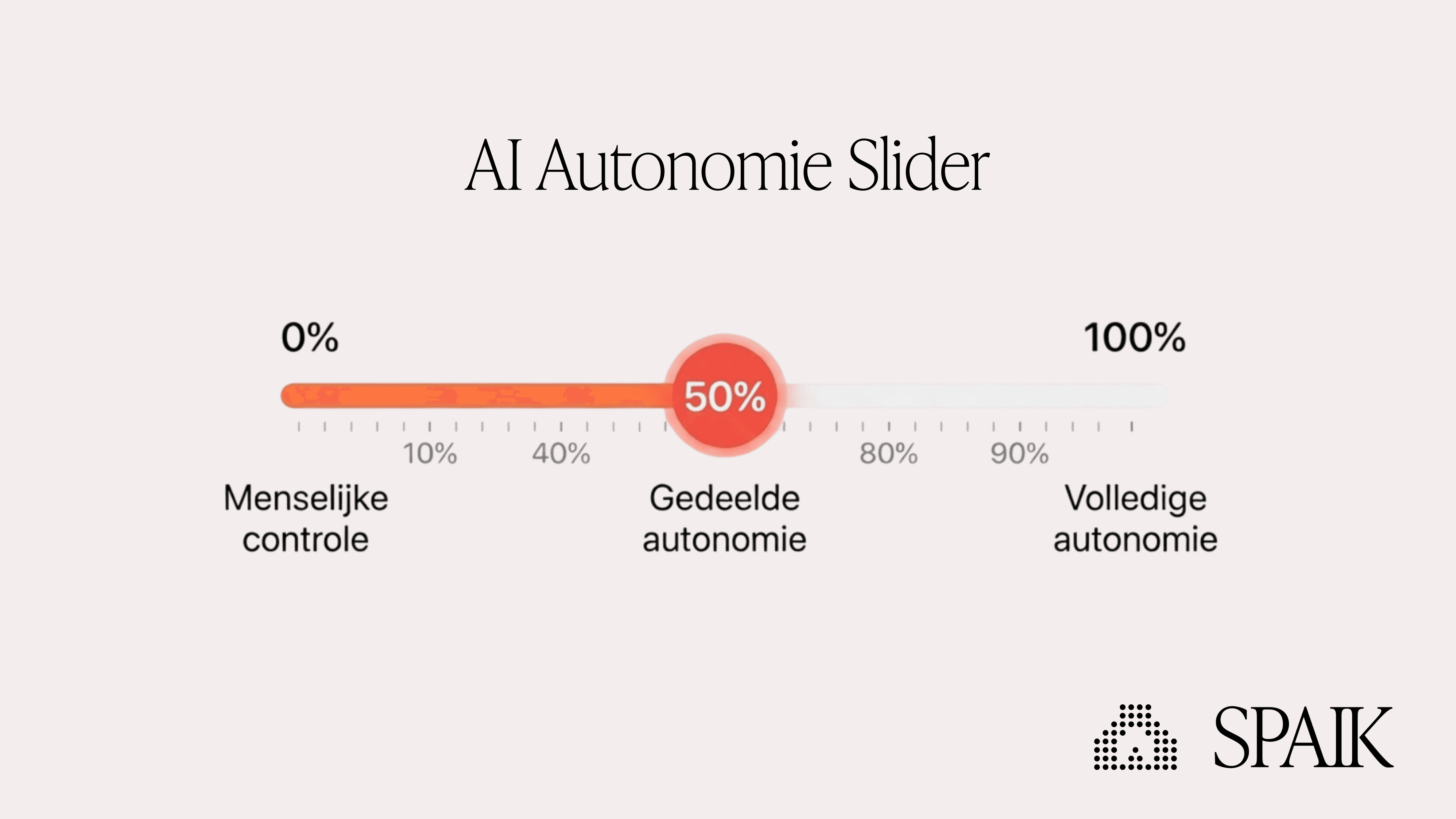

Think of AI autonomy as a slider. Left: 0%, full human control. Right: 100%, full AI autonomy.

In a pilot, you're somewhere around 50%. Shared autonomy. AI does the work, humans check everything.

To scale, you need to move right. Not to 100%—that's rarely realistic or desirable. But to 97%. A point where you trust AI to correctly handle the vast majority of cases.

That leap from 50% to 97% is where most pilots fail.

Because that trust must be earned. By testing. By capturing edge cases. By proving that the output is consistently good.

And then, after scaling, you keep working together towards 99%. Not by checking less but by checking smarter. By learning which cases really do require extra attention.
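One possible way to make the slider measurable is to read it as the share of cases the AI handles without a human check. That reading is an assumption of this sketch, not a definition from the text above. The sketch also assumes the AI system returns a confidence score per case. The `route` and `autonomy_rate` functions, the threshold values, and the data are hypothetical.

```python
def route(cases, threshold):
    """Split cases into auto-handled and human-reviewed,
    based on an assumed per-case confidence score."""
    auto = [c for c in cases if c["confidence"] >= threshold]
    review = [c for c in cases if c["confidence"] < threshold]
    return auto, review


def autonomy_rate(cases, threshold):
    """Share of cases handled without human review (0.0 to 1.0)."""
    if not cases:
        return 0.0
    auto, _ = route(cases, threshold)
    return len(auto) / len(cases)


# Hypothetical batch: 97 confident cases and 3 doubtful ones.
cases = (
    [{"id": i, "confidence": 0.95} for i in range(97)]
    + [{"id": 97 + i, "confidence": 0.40} for i in range(3)]
)

# Pilot-style setting: a threshold above 1.0 sends everything to a human.
pilot_rate = autonomy_rate(cases, threshold=1.01)

# Scaled setting: only low-confidence cases go to a human.
scaled_rate = autonomy_rate(cases, threshold=0.90)
_, needs_review = route(cases, threshold=0.90)

print(f"pilot autonomy: {pilot_rate:.0%}, scaled autonomy: {scaled_rate:.0%}")
print(f"cases for human review: {[c['id'] for c in needs_review]}")
```

Lowering the threshold is only justified once the tests and edge cases back it up. The number itself proves nothing, because the trust behind it has to be earned first.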

Maintaining quality at scale

With 5 users, you can personally check whether the output is good. With 50 users, you no longer can.

The solution: teach users to check the quality themselves.

That means you have to agree together: what is quality? What is good enough? Where are the boundaries?

You need to have this conversation before you scale. Otherwise, you'll have 50 people with 50 different standards.
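A simple way to see whether the standard is really shared is to let several people judge the same outputs and count how often they agree. The sketch below is one hypothetical way to do that. The reviewer names, the verdict labels, and the `unanimous_share` helper are invented for illustration.

```python
def unanimous_share(verdicts):
    """verdicts maps an output id to {reviewer: verdict}.
    Returns the share of outputs on which all reviewers agree."""
    if not verdicts:
        return 0.0
    unanimous = sum(
        1 for by_reviewer in verdicts.values()
        if len(set(by_reviewer.values())) == 1
    )
    return unanimous / len(verdicts)


# Hypothetical review round: three reviewers judge the same four outputs.
verdicts = {
    "output_1": {"anna": "ok", "bram": "ok", "chris": "ok"},
    "output_2": {"anna": "ok", "bram": "not ok", "chris": "ok"},
    "output_3": {"anna": "not ok", "bram": "not ok", "chris": "not ok"},
    "output_4": {"anna": "ok", "bram": "ok", "chris": "ok"},
}

share = unanimous_share(verdicts)
disputed = [
    output_id for output_id, by_reviewer in verdicts.items()
    if len(set(by_reviewer.values())) > 1
]

print(f"unanimous on {share:.0%} of outputs; discuss: {disputed}")
```

The disputed outputs are the useful part. They are the concrete examples to put on the table when you agree on what "good enough" means.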

You can automate a quality check. But initially, you want to keep it human. So that people learn what they need to check and why.

"Human in the loop" doesn't become smaller when scaling—it becomes larger. More people who have to check. More people who need to understand what is good. Until you reach that 97% trust level—and build together towards 99%.

When is a pilot ready to scale?

Three signals:

1. The output is consistent

Not only in the easy cases but also in the edge cases. The pilot delivers the right output in multiple situations.

2. The standard is shared

Everyone who will use the tool knows what quality is. And agrees with it.

3. The end-users are informed

No surprises during the rollout. People know what's coming, why, and what it means for them.

Missing one? Then you're scaling too early.
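The three signals work as an all-or-nothing checklist. As a minimal sketch, with a hypothetical `PilotStatus` record and field names chosen for illustration:

```python
from dataclasses import dataclass, fields


@dataclass
class PilotStatus:
    output_consistent: bool   # consistent on easy cases and edge cases
    standard_shared: bool     # users know and agree with the quality standard
    end_users_informed: bool  # no surprises during the rollout


def missing_signals(status):
    """Return the names of the signals that are not yet met."""
    return [f.name for f in fields(status) if not getattr(status, f.name)]


def ready_to_scale(status):
    """A pilot is ready only when all three signals are met."""
    return not missing_signals(status)


# Hypothetical pilot: the technology works, but the standard is not shared.
status = PilotStatus(
    output_consistent=True,
    standard_shared=False,
    end_users_informed=True,
)

print(ready_to_scale(status))
print(missing_signals(status))
```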

Frequently Asked Questions

How long does the step from pilot to production take?

On average 2-4 months, depending on how many people you need to involve and how complex the edge cases are. Haste is counterproductive—scaling too soon leads to resistance.

Can we automate quality control?

Partially, in the long run, but start with human checks. People first need to learn what quality is before you can automate checking it. Otherwise, you're automating the wrong standard.

What if some people don't want to use the tool?

That is often a sign that they weren't involved in determining the standard. Go back, ask for their input, and adjust where necessary. Coercion doesn't work.

Who should own the scaling?

A duo: someone for the people (support, communication) and someone for the content (quality, standard). Together they are the point of contact.

Scaling is the hardest step. The AI Kickstart guides you from pilot to production, including setting up ownership, quality standards, and support among the broader group.

Source: Harvard Business Review: Why Pilots Succeed and Scale-Ups Fail